Experts warn that AI-generated images, audio, and video could influence upcoming elections. OpenAI is introducing a tool that identifies content produced by its DALL-E image generator, but the company acknowledges it is only a small step in combating deepfakes.

OpenAI plans to share its deepfake detector with disinformation researchers to refine it through real-world testing. Sandhini Agarwal, an OpenAI researcher, emphasizes the need for ongoing research in this area.

The new detector correctly identifies 98.8% of images created by DALL-E 3, but it is not designed to detect images from other generators such as Midjourney and Stability.

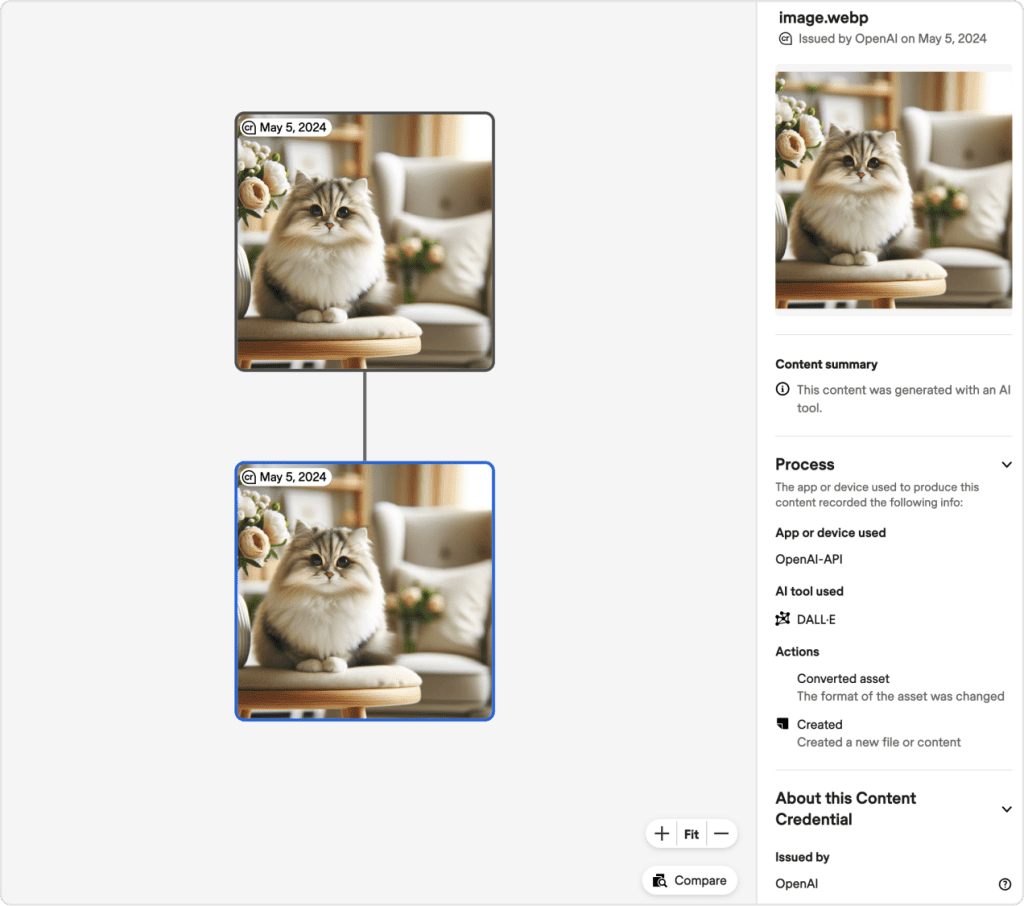

Given the inherent limitations of probability-based detection, OpenAI, along with tech giants like Google and Meta, is exploring alternative strategies. The companies are involved in initiatives such as the Coalition for Content Provenance and Authenticity (C2PA), which aims to establish standards for verifying digital content, including AI-generated media.

OpenAI is also working on watermarking AI-generated audio so that it can be identified in real time, and it aims to make these watermarks tamper-resistant.

Pressure is mounting on the AI industry to address the proliferation of misleading content. Recent instances of AI-manipulated content influencing elections in various countries underscore the need for better monitoring and tracing mechanisms.

While OpenAI’s deepfake detector is a step forward, it’s not a complete solution. Agarwal emphasizes that combating deepfakes requires a multifaceted approach, as there’s no single fix.