Google’s annual developer conference, I/O, opened on May 14, 2024, with a keynote full of announcements and glimpses into the future of technology. One name stood out among them: Gemini.

Developed by Google DeepMind, Gemini is not just another large language model (LLM). It’s a family of AI models built from the ground up to be multimodal, seamlessly understanding and processing information across different formats like text, code, audio, images, and even video. This marks a significant leap from previous LLMs that primarily focused on text-based interactions.

The Power of Multimodality

Imagine searching for a recipe and not only getting text instructions but also an interactive video demonstration. Or, picture a world where debugging code involves natural language conversations with the AI, pointing out potential errors visually within the code itself. This is the power of multimodality that Gemini brings to the table.

Here’s a deeper dive into how this multimodality empowers Gemini across various applications:

- Enhanced Search: Gemini can analyze text queries alongside images or videos. Imagine searching for “best hiking trails near Yosemite” and getting results that include not only text descriptions but also immersive video previews of the trails.

- Revolutionizing Creativity: Artists and designers can leverage Gemini’s ability to understand and generate different media formats. Imagine sketching a rough design and having Gemini translate it into a high-fidelity image or generate variations based on your preferences.

- Breaking Language Barriers: Imagine seamlessly translating not just text, but spoken conversations across languages, incorporating cultural nuances and even translating on-screen text within videos.

- Supercharging Developers: Software developers can use Gemini’s code comprehension capabilities to write better code faster. Imagine describing a bug in plain language and having Gemini highlight the suspect lines directly in the code.
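
To make the idea of a mixed-format query concrete, here is a minimal sketch of how text and media inputs could be bundled into a single request. It is only an illustration: the `Part` type and the helper functions are hypothetical names invented for this example, not part of any Gemini API, and the file names are placeholders.

```python
from dataclasses import dataclass
import mimetypes


@dataclass(frozen=True)
class Part:
    """One piece of a mixed-format query (hypothetical type, not a Gemini API class)."""
    kind: str       # "text", "image", "audio", or "video"
    mime_type: str
    source: str     # the literal text, or a file path for media


def text_part(text: str) -> Part:
    return Part(kind="text", mime_type="text/plain", source=text)


def media_part(path: str) -> Part:
    # Guess the MIME type from the file extension; the file itself is never opened.
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None:
        raise ValueError(f"cannot infer media type for {path!r}")
    kind = mime_type.split("/")[0]
    if kind not in {"image", "audio", "video"}:
        raise ValueError(f"unsupported media type {mime_type!r}")
    return Part(kind=kind, mime_type=mime_type, source=path)


def modalities(parts: list[Part]) -> set[str]:
    """Return the set of formats present in a query."""
    return {p.kind for p in parts}


# A text question combined with a photo and a video clip (placeholder file names).
query = [
    text_part("best hiking trails near Yosemite"),
    media_part("trail_photo.jpg"),
    media_part("trail_preview.mp4"),
]
print(sorted(modalities(query)))
```

A real multimodal model would receive the media bytes as well as the text. The sketch only shows the shape of the input: one ordered list of typed parts rather than a single string.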

The Gemini Family: A Model for Every Need

The beauty of Gemini lies in its versatility. Google DeepMind has released a suite of models under the Gemini umbrella, each tailored to specific needs:

- Gemini Ultra: This is the heavyweight champion, the largest and most capable model, intended for highly complex tasks that require extensive reasoning and data analysis.

- Gemini Pro: This is the all-rounder, offering excellent performance across a wide range of tasks and ideal for most user applications.

- Gemini Flash: This lightweight model prioritizes speed and efficiency, making it a good fit for high-volume, latency-sensitive tasks where response time and cost matter most.

- Gemini Nano: The most power-efficient variant, designed specifically for running AI tasks directly on mobile devices without compromising battery life.
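
The trade-offs in this list can be sketched as a small decision function. This is an assumption-laden illustration rather than official guidance: the `Task` fields, the `pick_tier` name, and the order of the checks are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Rough description of a workload (hypothetical fields for illustration)."""
    on_device: bool = False          # must run locally on a phone
    highly_complex: bool = False     # needs the most capable model
    latency_sensitive: bool = False  # speed and efficiency matter most


def pick_tier(task: Task) -> str:
    """Map a workload to a Gemini tier using the descriptions in the list above."""
    if task.on_device:
        return "Nano"    # designed for running directly on mobile devices
    if task.highly_complex:
        return "Ultra"   # heavyweight model for highly complex tasks
    if task.latency_sensitive:
        return "Flash"   # lightweight model that prioritizes speed
    return "Pro"         # all-rounder for most applications


print(pick_tier(Task(latency_sensitive=True)))
```

The on-device check comes first because it is a hard constraint, while the other two flags express preferences. A different ordering would be equally defensible for other workloads.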

Gemini in Action: A Glimpse into the Future

Google I/O wasn’t just about showcasing Gemini’s capabilities; it was about demonstrating how this technology will be integrated into our daily lives. Here are a few exciting examples:

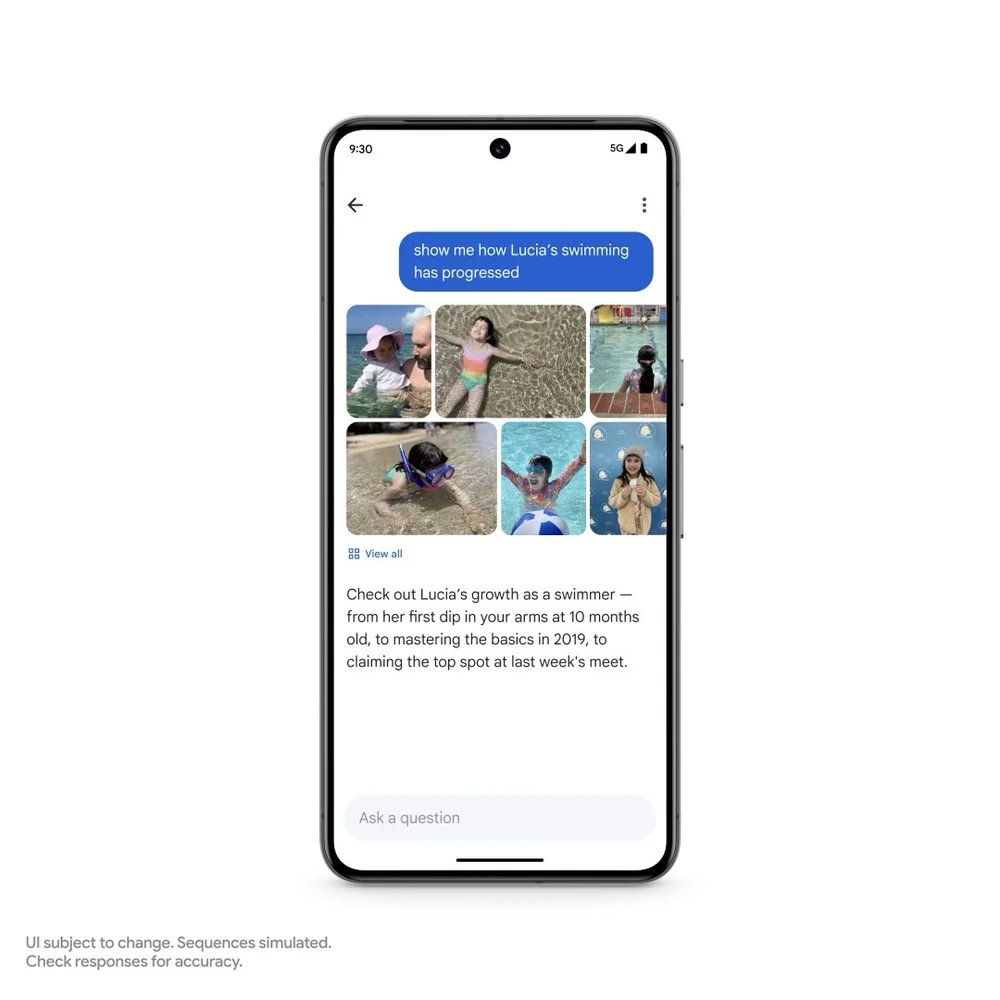

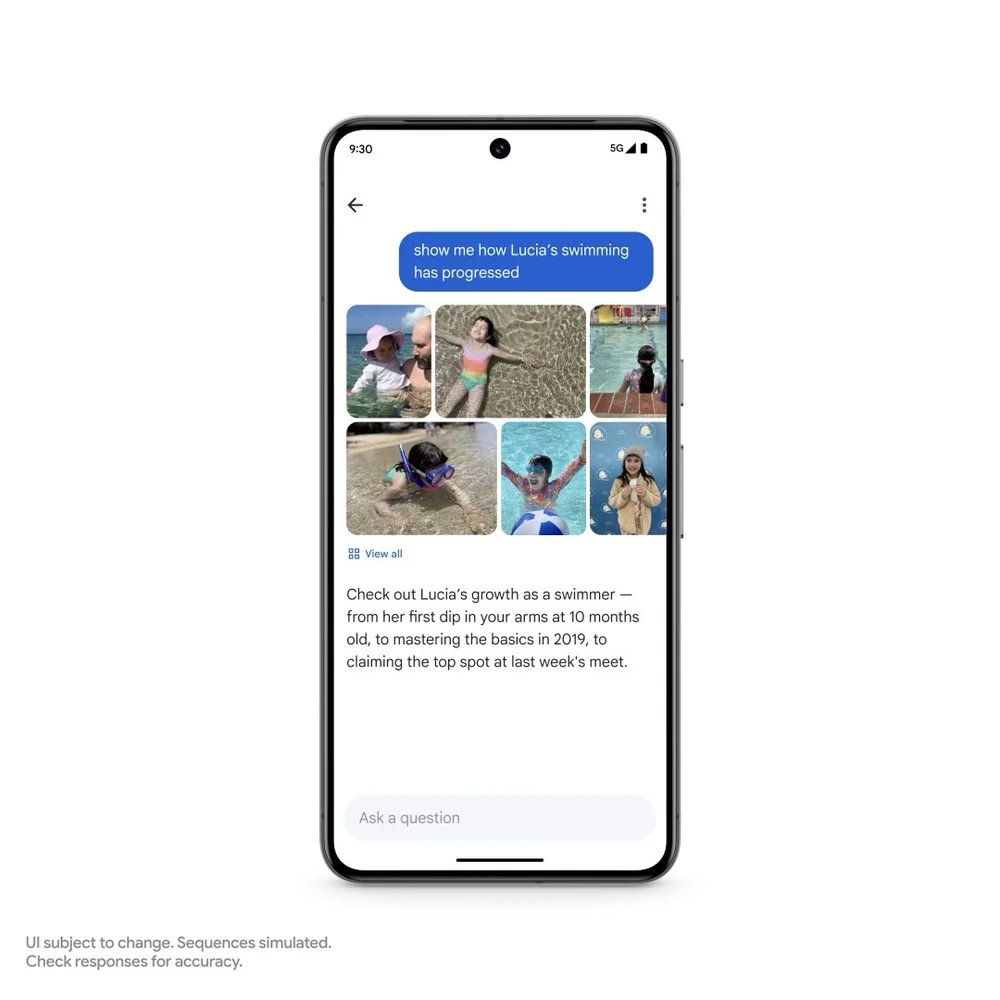

- Pixel Gets a Pixie: Reports ahead of the event described a new Gemini-powered AI assistant for Pixel phones called Pixie, although Google did not announce a product under that name at I/O. The idea behind it is an assistant that goes beyond simple voice commands, offering a more conversational and intuitive user experience.

- Smarter Search, Enhanced Chrome: Gemini will be integrated into the Chrome browser, providing a more intelligent search experience that understands context and user intent better. Imagine searching for a product and getting real-time price comparisons alongside reviews and user experiences – all within the same window.

- The Power of Personalization: Imagine educational platforms that adapt to your learning style, automatically generating different content formats like text, video, or interactive exercises based on your progress.

Beyond the Hype: Addressing the Challenges

As with any groundbreaking technology, Gemini raises important questions about bias, explainability, and accessibility. Google acknowledges these challenges and has outlined its commitment to developing responsible AI practices. It emphasizes the importance of diverse training data sets to minimize bias and promote fair outcomes. Additionally, it is working on methods to explain Gemini’s reasoning, making the model’s decision-making process more transparent. Finally, it plans to make Gemini accessible across different platforms and devices, so that as many people as possible can benefit from this technological leap.

The Road Ahead: A Future Powered by Multimodality

The arrival of Gemini signifies a paradigm shift in the field of AI. Its ability to seamlessly process and generate information across various formats opens doors to a future of richer user experiences, enhanced creativity, and groundbreaking solutions across industries. While challenges remain, Google’s commitment to responsible AI development paves the way for a future where this powerful technology empowers humanity.

This is just the beginning of the Gemini story. As developers and researchers explore its full potential, we can expect even more exciting applications and innovations on the horizon. The world of multimodality has arrived, and Google’s Gemini is at the forefront, shaping the future of AI.