Introduction

Alibaba, the Chinese e-commerce giant, remains a formidable force in China’s AI sector. Today it unveiled Qwen2, its latest AI model, which is being hailed as the leading open-source option currently available.

Qwen2 Development and Capabilities

Developed by Alibaba Cloud, Qwen2 is the latest iteration in the Tongyi Qianwen (Qwen) model series. This series includes the Tongyi Qianwen LLM, the vision AI model Qwen-VL, and Qwen-Audio. The Qwen models are pre-trained on multilingual data across various industries; Qwen-72B, the most powerful model, was trained on 3 trillion tokens.

Benchmark Performance

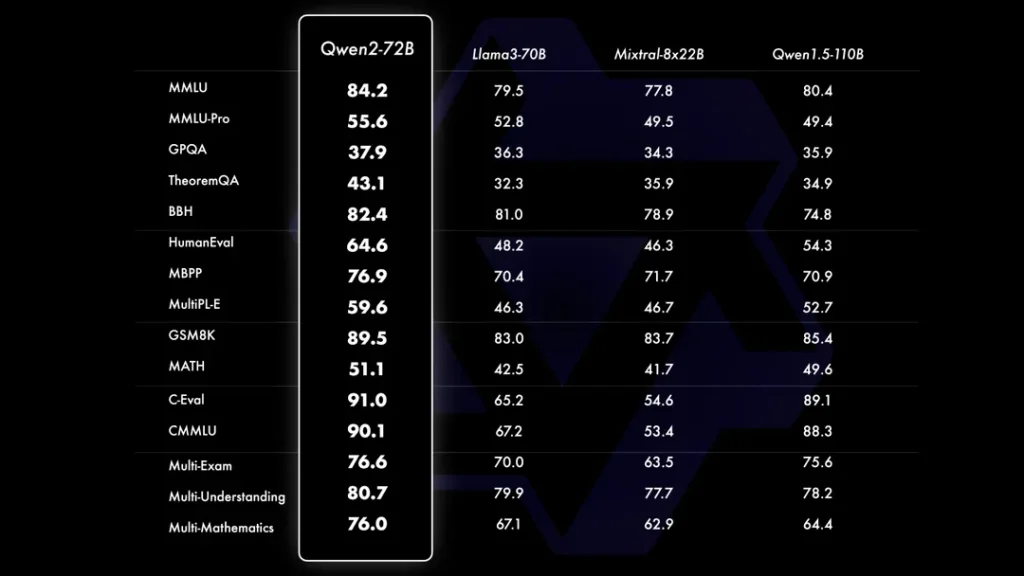

The Qwen2 model can handle 128K tokens of context, comparable to OpenAI’s GPT-4o. According to the Qwen team, it outperforms Meta’s Llama 3 in most synthetic benchmarks, which would make it the top open-source model available. However, the independent Elo Arena ranks Qwen2-72B-Instruct slightly below Llama 3 70B and GPT-4-0125-preview, making it the second most favored open-source LLM among human testers.

Model Variations and Improvements

Qwen2 is available in five sizes, ranging from 0.5 billion to 72 billion parameters. According to the Qwen team, the release improves performance across several areas of expertise. The models are also now trained on data in 27 additional languages, including German, French, Spanish, Italian, and Russian, alongside English and Chinese.

Competitive Edge

“Compared with state-of-the-art open-source language models, including the previously released Qwen1.5, Qwen2 has generally surpassed most open-source models and shown competitiveness against proprietary models across benchmarks targeting language understanding, language generation, multilingual capability, coding, mathematics, and reasoning,” stated the Qwen team on HuggingFace.

Contextual Understanding and Performance

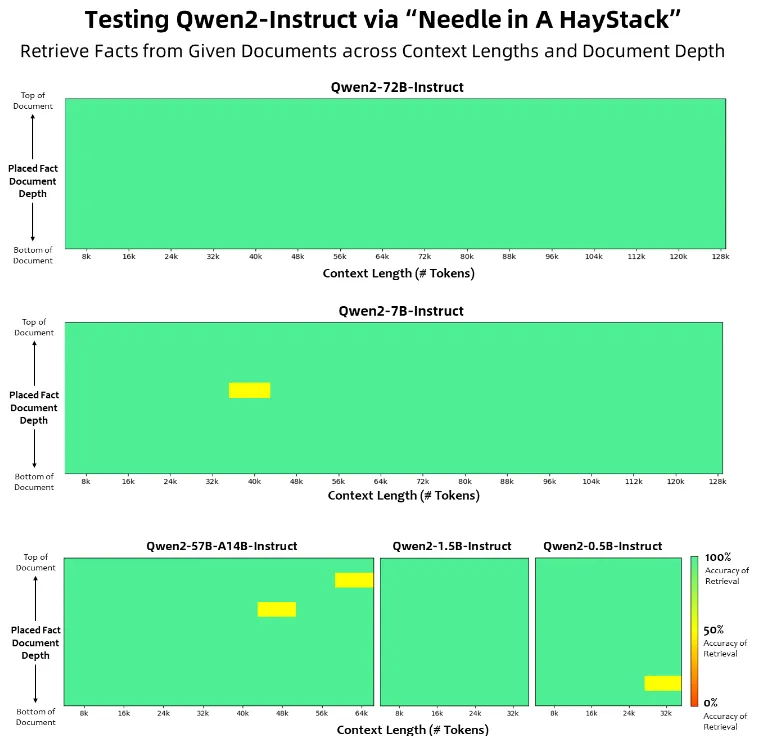

The Qwen2 models demonstrate strong comprehension of long contexts. Qwen2-72B-Instruct handles information extraction tasks across its extensive context window, passing the “Needle in a Haystack” test nearly flawlessly. This matters because model performance typically degrades as a conversation grows and the context fills up.
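For readers unfamiliar with the “Needle in a Haystack” test, the idea is to bury one short fact in a long stretch of filler text and then ask the model to retrieve it, repeating the exercise at different context lengths and insertion depths. The Python sketch below is illustrative only and is not the Qwen team’s evaluation harness. It measures length in characters rather than tokens, and every name in it (`build_haystack`, `run_sweep`, `fake_model`, the needle sentence) is a hypothetical chosen for this example. `fake_model` is a stand-in that simply searches the prompt; a real test would replace it with a call to an actual model.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical needle, question, and filler used only for this illustration.
NEEDLE = "The secret number is 48213."
QUESTION = "What is the secret number?"
EXPECTED = "48213"
FILLER = "The quick brown fox jumps over the lazy dog. "


def build_haystack(filler: str, needle: str, depth: float, total_chars: int) -> str:
    """Repeat filler up to total_chars and insert needle at a relative depth (0.0 to 1.0)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    repeats = total_chars // len(filler) + 1
    body = (filler * repeats)[:total_chars]
    cut = int(len(body) * depth)
    return body[:cut] + " " + needle + " " + body[cut:]


def score(answer: str, expected: str) -> bool:
    """Count the retrieval as correct if the expected string appears in the answer."""
    return expected.lower() in answer.lower()


def run_sweep(
    ask_model: Callable[[str], str],
    depths: List[float],
    lengths: List[int],
) -> Dict[Tuple[int, float], bool]:
    """Run one retrieval per (length, depth) pair and record whether it succeeded."""
    results = {}
    for length in lengths:
        for depth in depths:
            haystack = build_haystack(FILLER, NEEDLE, depth, length)
            prompt = haystack + "\n\nQuestion: " + QUESTION
            results[(length, depth)] = score(ask_model(prompt), EXPECTED)
    return results


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: finds the needle with a regular expression."""
    match = re.search(r"The secret number is (\d+)", prompt)
    return match.group(1) if match else "I don't know."


if __name__ == "__main__":
    sweep = run_sweep(fake_model, depths=[0.0, 0.5, 1.0], lengths=[1_000, 10_000])
    for (length, depth), ok in sorted(sweep.items()):
        print(f"length={length:>6} depth={depth:.1f} retrieved={ok}")
```

A model that passes “nearly flawlessly” would return a correct answer for almost every length and depth combination in such a sweep; failures tend to cluster at particular depths or at the longest contexts.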

Licensing Changes

With this release, Alibaba has updated the licensing for its models. While Qwen2-72B and its instruction-tuned variants retain the original Qianwen license, all other models have adopted the Apache 2.0 license, a standard in open-source software.

Future Plans and Multimodality

Alibaba Cloud plans to continue open-sourcing new models to advance open-source AI. The next upgrade will introduce multimodality to the Qwen2 LLM, potentially merging all family models into one powerful entity. “Additionally, we extend the Qwen2 language models to multimodal, capable of understanding both vision and audio information,” the team added.

Availability and Testing

Qwen2 is available for online testing via HuggingFace Spaces. Those with sufficient computing power can download the weights for free from HuggingFace. Decrypt tested the model and found it highly capable of understanding tasks in multiple languages. The model is also censored on themes considered sensitive in China, which is consistent with Alibaba’s claim that Qwen2 is the model least likely to produce unsafe results.

Conclusion

The Qwen2 model offers a robust alternative for those interested in open-source AI. Its context window is larger than that of most other models, including Meta’s Llama 3. The permissive licensing allows others to share fine-tuned versions, which could further improve its performance and reduce bias.